I called my post from last week "Results and Final Thoughts"... but after it went up, I had another thought. So that title was a lie! Many people out there analyze various aspects of the results, but I want to look at two things: how many people vote in each category, and how many people vote No Award.

First here are the categories ranked in terms of how many ballots were received:

So you'll see that the prose fiction categories pull in the most ballots typically, and the fan activity categories the least. I do think it's interesting that Best Novel (won by A Memory Called Empire) does the best of all the prose fiction categories, and Best Short Story (won by "As the Last I May Know") the worst (except for the Lodestar, won by Catfishing on CatNet), even though reading six short stories is the least amount of work! Best Series (won by The Expanse) gets a lot of votes, which makes me think people are voting based on their general knowledge of the series rather than, say, diligently reading all of Emma Newman's Planetfall books.

You'll also see that the "body of work" categories (those that go to a person, not a specific item), like Best Professional Artist (won by John Picacio), Best Editor (won by Ellen Datlow and Navah Wolfe), and so on, all languish pretty far down, with Best Fanzine (won by The Book Smugglers) coming in lowest of all categories, getting just a third of the ballots of Best Novel. The Best Novel Hugo is the one that has all the prestige attached to it, and that clearly leads to it garnering the most fan ballots, too. Best Dramatic Presentation (won by Good Omens and The Good Place) is probably the least amount of work to cover (I had seen three of the six nominees in Long Form beforehand, for example), which probably helps it.

Next, here are all the categories ranked in terms of how many ballots ranked No Award in first place:

Voting No Award in first place usually means one of two things, I would claim. First, it could mean that you find the concept of the category invalid. Every year, I vote No Award for Best Series, Best Editor, and a couple other categories, for example, and leave the rest of my ballot blank. I have some fundamental disagreements with the premises of those categories, and do not think they should be awarded. (Very few Hugo voters agree with me, though, clearly.) It could also mean that you just found everything in that category subpar: this year I voted No Award for Best Short Story, but still ranked finalists below it.

We might guess that Hugo voters agreed with me about Best Short Story, for example, as it had the highest level of first-place No Award votes of any prose fiction category. On the other hand, Best Novella (won by This Is How You Lose the Time War) had the least, indicating a category where every voter but seventeen felt comfortable saying one of the six finalists deserved to win.

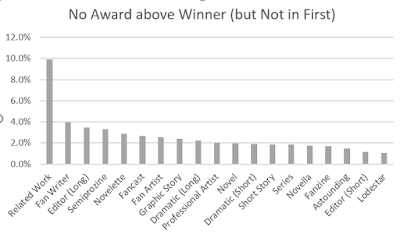

One interesting piece of information given on the results is how many ballots ranked No Award above the eventual winner. This could mean 1) the ballot just No Awarded the entire category, as I did Best Series and Best Short Story, or it could mean that 2) on that voter's ballot, No Award was placed higher than the winner, as was true for me in Best Related Work, where I had No Award in 6th, and the actual winner in 7th (Ng's acceptance speech for the 2019 Campbell Award). (This is reported because if a certain number of ballots include No Award above the winner, No Award wins, even if it doesn't on the normal counting method. I don't think this has ever actually come to pass.)
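For anyone who wants to tally this from raw ballots, here is a minimal Python sketch of the count described above. Everything in it is illustrative: the ballots are invented, `prefers_no_award` and `no_award_counts` are hypothetical helper names, and the handling of unranked entries (treated as ranked below everything the voter did rank) is an assumption on my part rather than a statement of the official counting rules.

```python
NO_AWARD = "No Award"


def prefers_no_award(ballot, winner):
    """Say whether one ballot ranks No Award above the winner.

    `ballot` is a list of entries in preference order. Entries the voter
    left off are assumed to sit below everything ranked (an assumption).
    Returns True, False, or None if the ballot ranks neither.
    """
    has_na = NO_AWARD in ballot
    has_winner = winner in ballot
    if not has_na and not has_winner:
        return None   # ballot says nothing about this comparison
    if not has_na:
        return False  # winner ranked, No Award left off
    if not has_winner:
        return True   # case 1: No Award ranked, winner left off
    # case 2: both ranked, compare positions
    return ballot.index(NO_AWARD) < ballot.index(winner)


def no_award_counts(ballots, winner):
    """Return (ballots with No Award above winner, ballots with winner above)."""
    results = [prefers_no_award(b, winner) for b in ballots]
    return results.count(True), results.count(False)


# Invented ballots, purely for illustration.
ballots = [
    ["No Award"],                      # No Awarded the whole category
    ["A", "B", "No Award", "Winner"],  # winner ranked, but below No Award
    ["Winner", "A"],                   # never used No Award
    ["Winner", "No Award"],            # winner first, then No Award
    ["A", "B"],                        # ranked neither
]

above, below = no_award_counts(ballots, "Winner")
print(above, below)
```

The first number is the figure the results report; the second is what it would be compared against.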

What this lets us see is particularly controversial winners. For example, we can tell that though Ng's 2019 speech won Best Related Work, over 10% of voters thought (like me) that they'd rather see nothing win than see it win. Best Short Story still ranks highest on this list of all the prose fiction categories, too, potentially indicating a weak set of finalists. (To the extent that we can call a set of finalists "weak" when only 5% of voters objected to it!)

What this reveals, I would argue, is how many voters believed a category had some basic level of validity (so they didn't put No Award first for whatever reason), but didn't like what actually ended up winning. You'll see that Best Related Work sticks out very prominently by this metric-- almost 10% of voters in that category didn't think the category was bad per se, just the work that won it. In this new metric, the most prominent prose fiction category actually becomes Best Novelette, indicating that N. K. Jemisin's Emergency Skin was arguably a controversial winner. (I didn't rank it below No Award, but I did think it a weak finalist.) Best Fanzine, which was really high on the last list, drops very low, showing that many people are against the category as a whole, but those who did vote in it largely thought the eventual winner a worthy one. And I guess everyone just loved Catfishing on CatNet!

There's one other statistic I want to explore, but I'm not sure if it even represents a real thing. Is there a correlation between how many people vote in a category, and how many first-place No Award votes it receives? Without looking at the data, you might expect a positive correlation: the bigger a category, the more people vote No Award, so a consistent-ish percentage. But in fact, there is an inverse correlation: the bigger a category, the fewer people vote No Award.
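A quick way to test whether a correlation like this exists is to compute a Pearson coefficient between category size and No Award share. The sketch below does that in plain Python. The numbers in it are made-up placeholders, NOT the actual Hugo results, and `pearson` is just a hand-rolled helper, so treat it as a template to paste real figures into rather than as evidence of anything.

```python
from math import sqrt


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need two sequences of the same length (>= 2)")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    return cov / sqrt(var_x * var_y)


# PLACEHOLDER data: replace with real per-category figures.
ballots_received = [1800, 1500, 1200, 900, 700]
no_award_first = [30, 32, 35, 33, 36]

# Share of each category's ballots that put No Award first.
shares = [na / total for na, total in zip(no_award_first, ballots_received)]

r_share = pearson(ballots_received, shares)          # size vs. percentage
r_count = pearson(ballots_received, no_award_first)  # size vs. absolute count

print(f"size vs. No Award share: r = {r_share:.2f}")
print(f"size vs. No Award count: r = {r_count:.2f}")
```

A negative `r` means bigger categories go with fewer No Award votes; values near zero mean no linear relationship. With only a couple dozen categories, a single outlier can move the coefficient a lot.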

One explanation I came up with was that perhaps there's just a dedicated core group of No Award voters. So say there are thirty Hugo voters who like using No Award a lot. They would be a bigger percentage of a category with 700 ballots received (about 4%) than one with 1,800 ballots received (about 1.7%). But if you look at absolute numbers, there's still a trend, just not as clear a one:

(I think; I'm no statistician, obviously.) The bigger the category, the fewer No Award votes it received. I'm not sure what to make of this fact. Does it indicate that the bigger categories are bigger for a reason, i.e., people view them as more legitimate? What I find particularly interesting, though, is the two outliers: there are just two categories where the number of No Award votes is significantly higher than we would expect based on category size. One is Best Short Story, which I've discussed a couple times already as an outlier, but the other is Best Series (won by The Expanse). I mentioned above that I always No Award this category because I don't believe it's a good idea; for whatever reason, a disproportionate number of other voters are also voting No Award here.
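The "dedicated core" arithmetic from earlier is easy to check. This small Python sketch uses the hypothetical thirty-voter core and the two example category sizes from the text; `core_share` is just an illustrative helper name.

```python
def core_share(core_voters, ballots_received):
    """Percentage of a category's ballots cast by a fixed core of voters."""
    return 100 * core_voters / ballots_received


small = core_share(30, 700)    # the smaller example category
large = core_share(30, 1800)   # the larger example category

print(f"{small:.1f}% vs {large:.1f}%")  # prints "4.3% vs 1.7%"
```

If a fixed core were the whole story, the percentage would fall as categories grow but the absolute count would stay flat, which is why a remaining trend in the absolute numbers needs some other explanation.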

I'd be curious to know if the supposed trend I've identified here actually is a trend. Perhaps in a future post I'll look at the use of No Award in previous Hugo years to see if anything similar emerges.

I can imagine why you might disagree with the premise of Best Series, but what are your issues with the Best Editor categories...?

Also, what software are you using to generate these graphs?

Two things. 1) I think where possible, Hugos should go to works not people, because otherwise it becomes too much about the personality, not the work. 2) Editing is too difficult to judge externally, esp. in Long Form, where editors' identities aren't really advertised. (It's different in Short Form, where the editor is the public face of a mag, or credited on an anthology.) Tor puts editors' names on the copyright... sometimes... but that's about it. I think being a good editor is mostly about promoting your writers, and otherwise being invisible, and getting an award seems to cut against that.

The finalists for Best Editor seem to come from a very small pool: same people and same publishers every year. An editor for Orbit has only made the ballot once, even though at least one book, usually two, from Orbit made the Best Novel ballot every year from 2011 to 2018. They aren't self-promoting and aren't involved in fandom the same way, say, Tor editors seem to be, and so they don't get the awards.

I used to think I'd like to see a Best Magazine (replacing the unwieldy Best Semiprozine as well as Best Editor (Short Form)), but really, magazines are awarded when their fiction wins. I do see the argument for Best Anthology/Collection. Best Editor (Long Form) should just go away.

Oh, and the graphs were all done with a minimum of effort and skill in Excel.

Interesting. I am one of the voters who put No Award over two of the less controversial winners. As a first-time voter, I voted No Award four times, twice the work made last place, and I left the editors blank.

For Series you are right: I only read the excerpts provided, and for Planetfall the first book.

Interestingly enough, my priorities were different than most. (Semipro/Fancast and Series were the last I voted in; for Short Story I had a ballot on my PC before I had the Package, and maybe even my membership.)